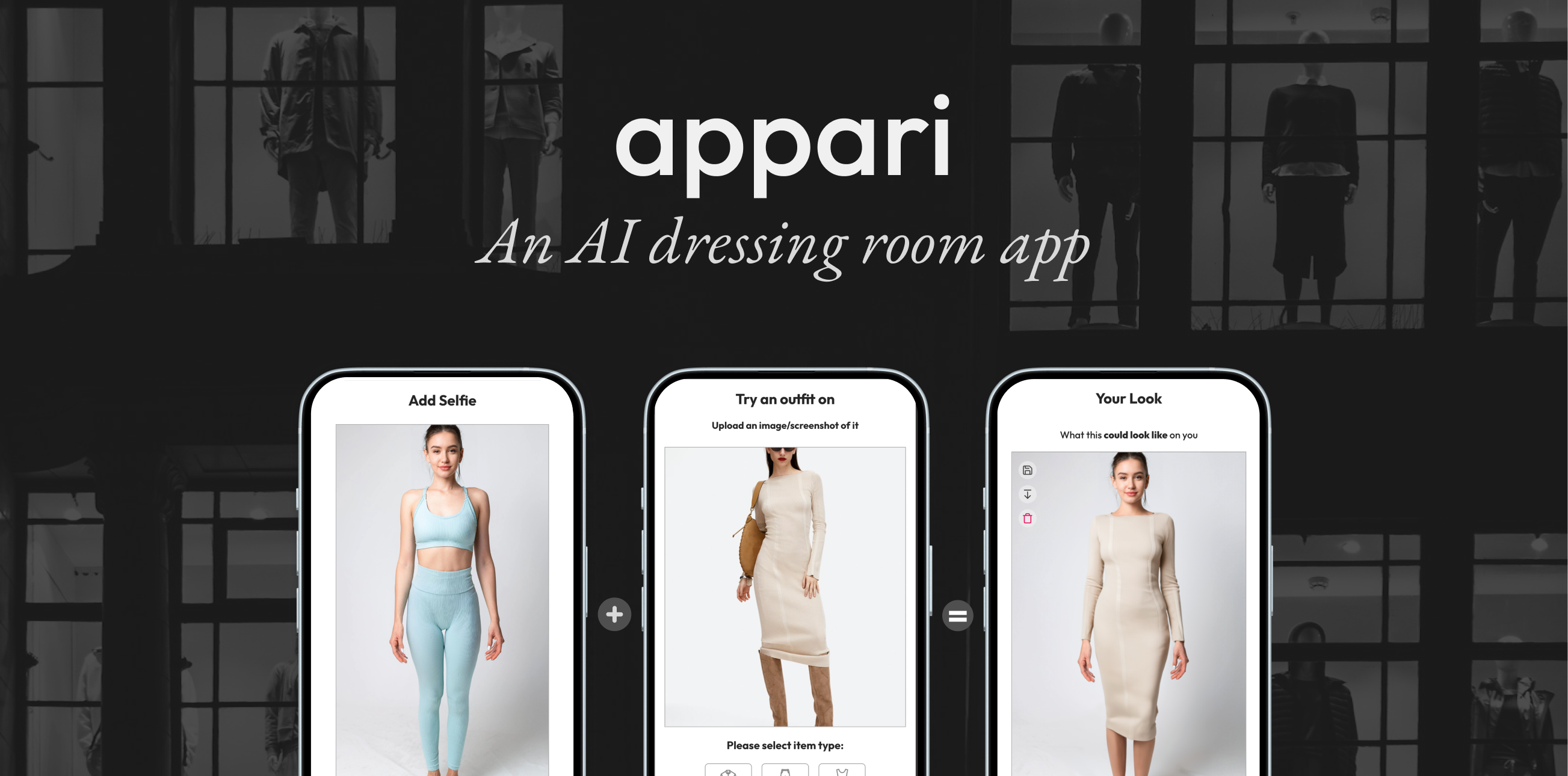

Appari is a gen AI try-on app that helps shoppers visualise clothing on themselves before buying. I led the end-to-end design of the MVP, from user research and strategy through to a live product with 250+ early users and two retail partners signed up for pilot testing.

The goal was to reduce purchase uncertainty, lower return rates, and give users confidence in their online fashion choices through a simple, mobile-first experience.

Role

UX Visionary

Link

appari.ai

Medium

Mobile App & Website

Tools

Figma, VS Code, ChatGPT, Claude, Vue.js, Tailwind

Shopping for clothes online is convenient until it isn't. Appari set out to solve common, frustrating problems shoppers face:

Issues

This leads to customer frustration, lower purchase confidence, and costly return rates for retailers.

The challenge: How might we help users see how clothing will look on them before they buy, in a way that is simple, quick, and visual?

The goal was to build an MVP that would allow users to upload a selfie and try on clothing virtually using AI. It needed to be intuitive, frictionless, and visually compelling, without requiring complex onboarding or body scanning.

I conducted research with 340+ participants through interviews and surveys, uncovering key insights:

"I usually buy two sizes, try them on, and return one. It's wasteful"

"If I could see how it fits before buying, I'd shop online more often"

"I want to try the dress before ordering"

I analysed existing solutions, including Zyler, True Fit, and various AR try-on tools.

Key gaps identified:

Design a mobile-first, cross-platform solution that works with any retailer.

I prioritised features for the initial launch based on user needs and technical feasibility:

Must-have (Phase 1):

Primary flow:

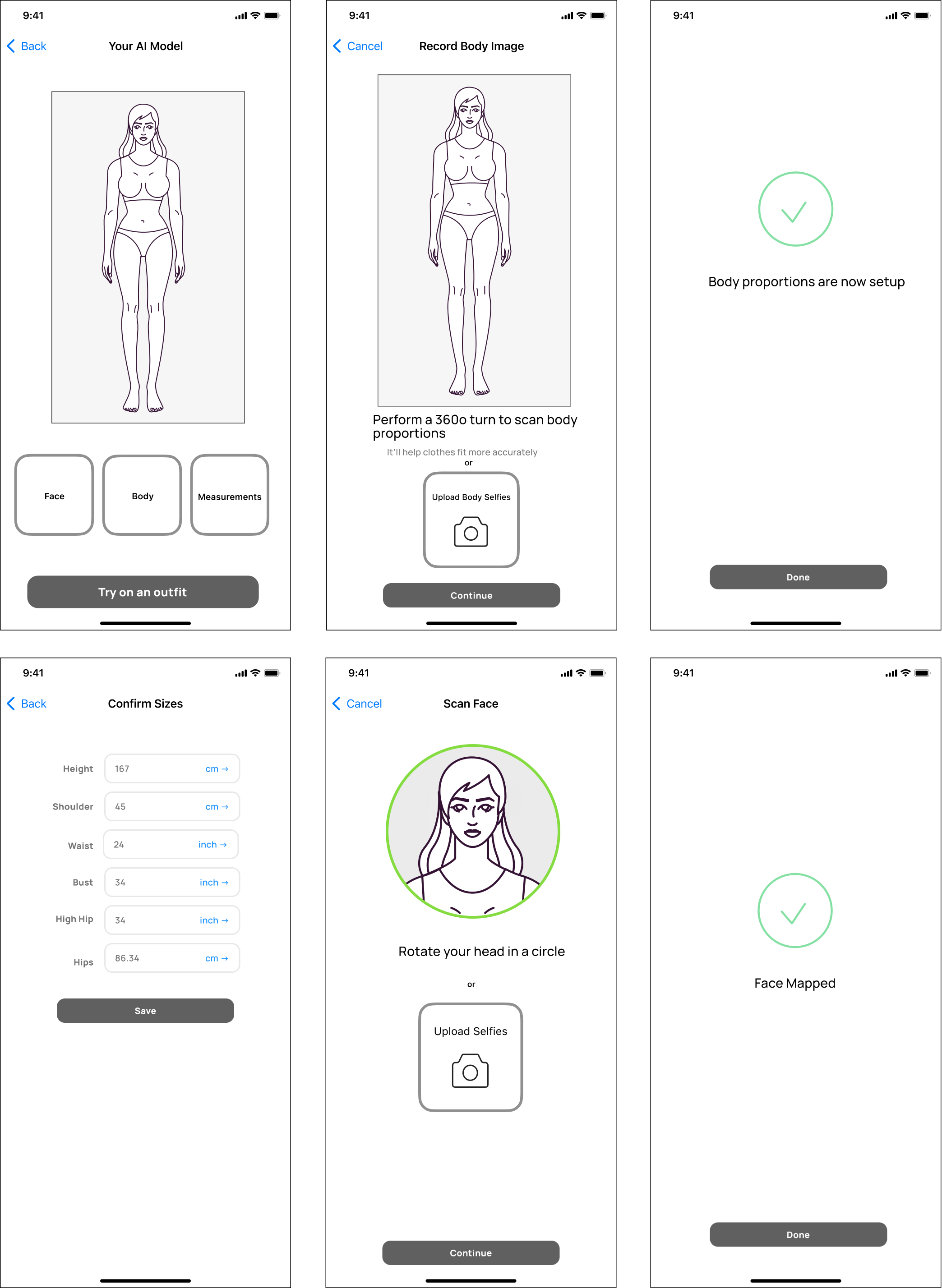

Goal: Create the most accurate digital twin of the user.

a - Scan face and body with the camera, or upload images

b - Input body measurements for accuracy

Discussion with potential users:

- Too long-winded, with too many steps. Needs to be simpler.

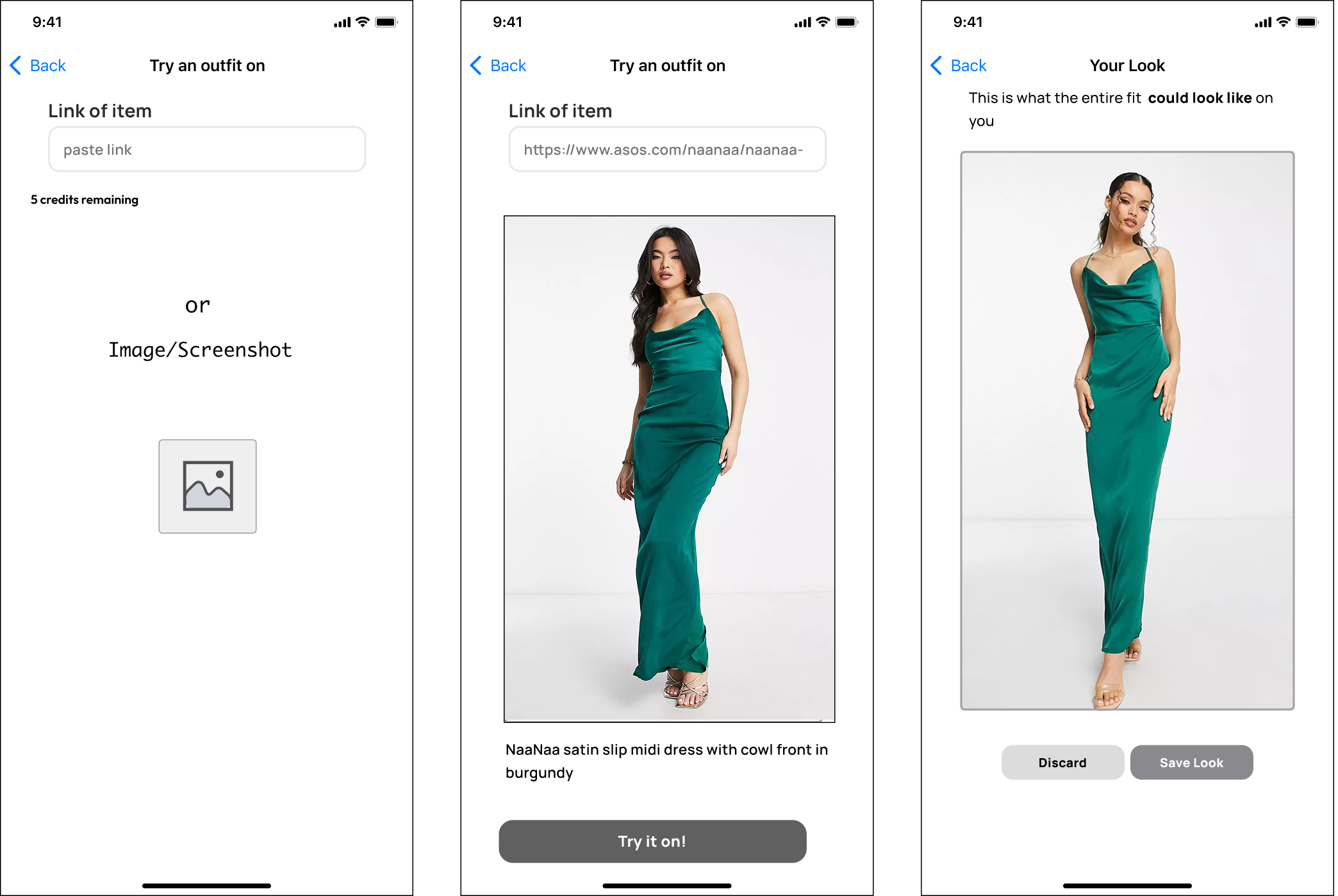

a - Option to input a link or a screenshot/image of the item.

I added image and screenshot input because clothes are often discovered through influencers' posts and images that friends share.

b - Clothing is remodelled on the user's digital twin.

Feedback:

This is simple and straightforward.

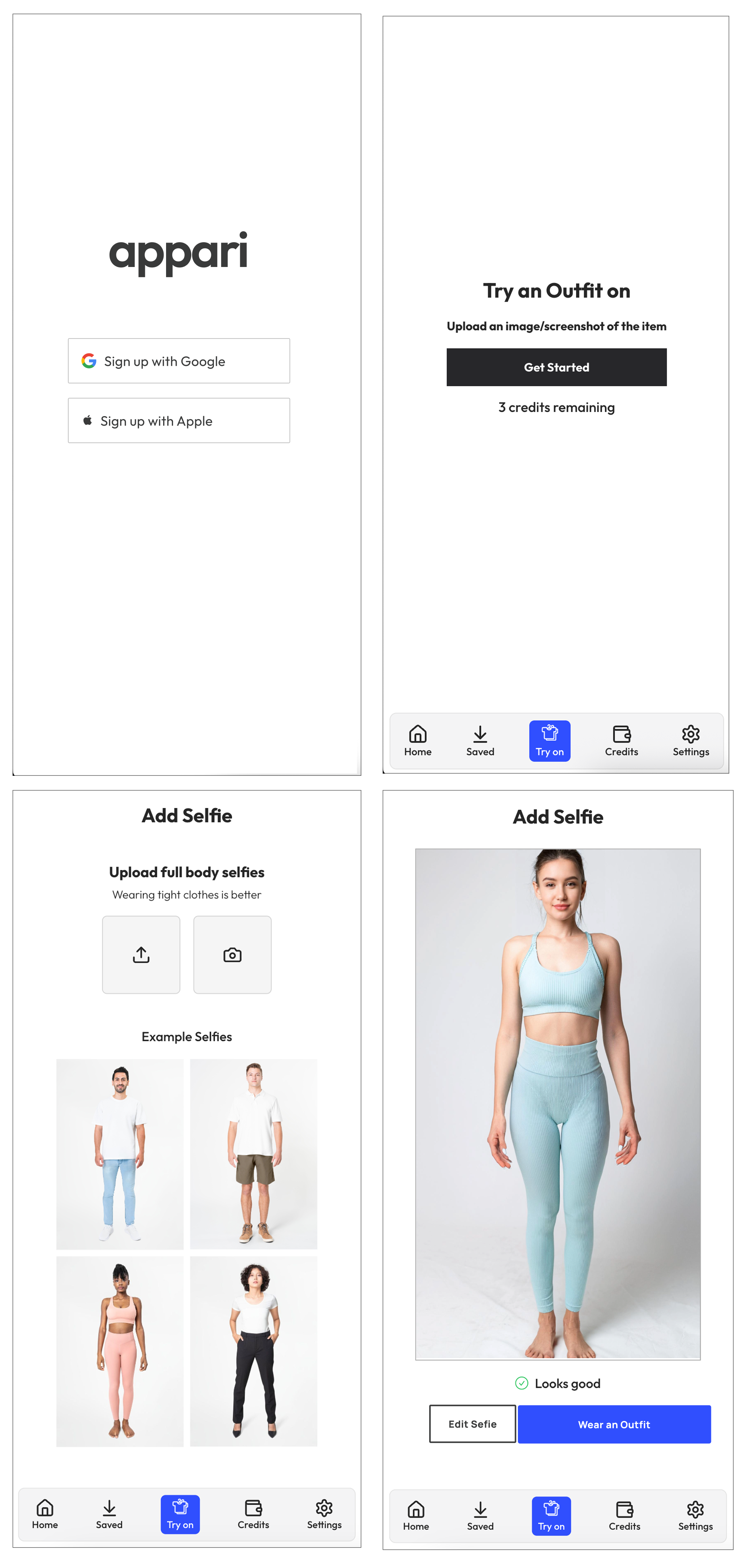

For the initial concept, I decided we should first test whether people would actually integrate this type of tool into their shopping flow, since it is a new way of shopping. I simplified the AI model's input to a single full-body selfie, as gen AI can work with that alone.

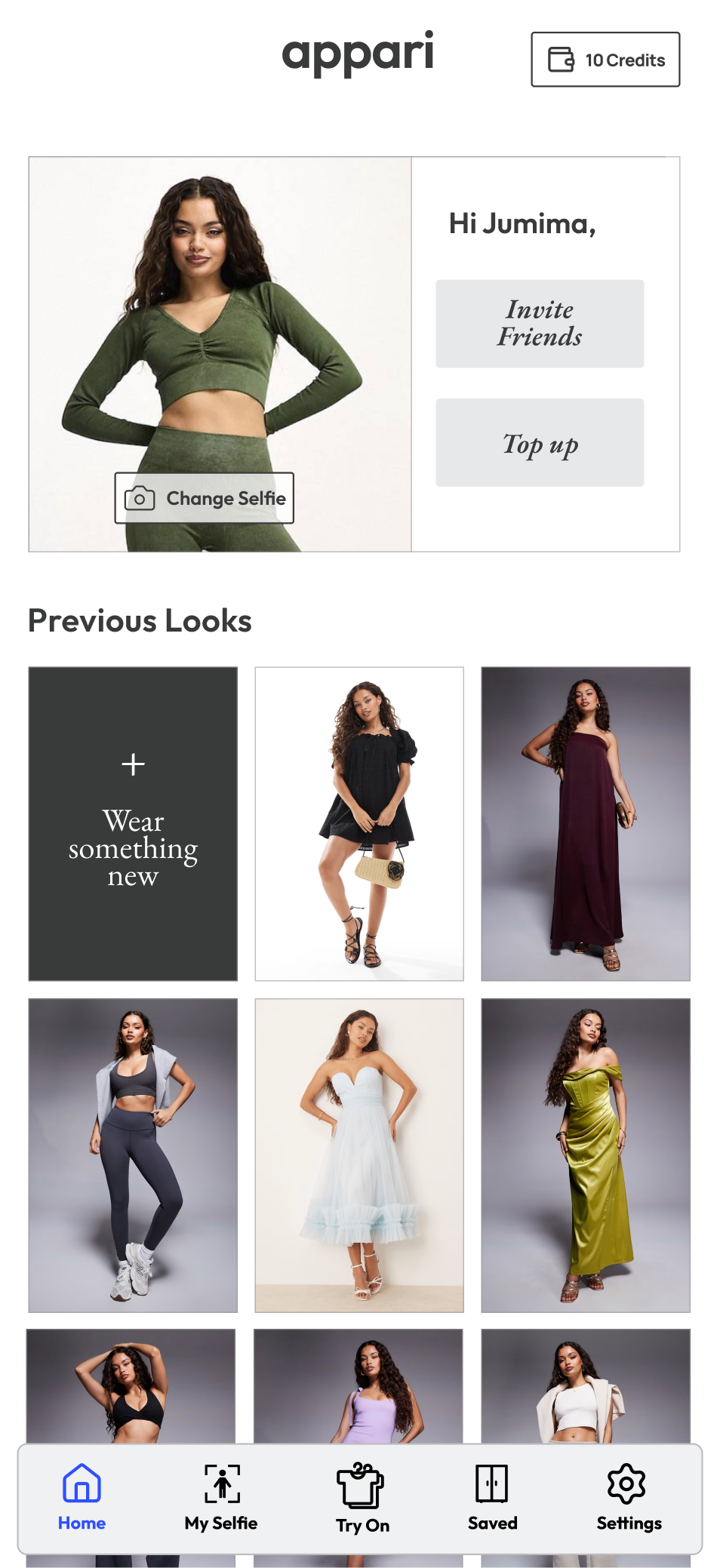

a - Simple onboarding with Google login

b - Single full-body image, with instructions for the best output

c - Users can take a photo or upload one from their camera roll

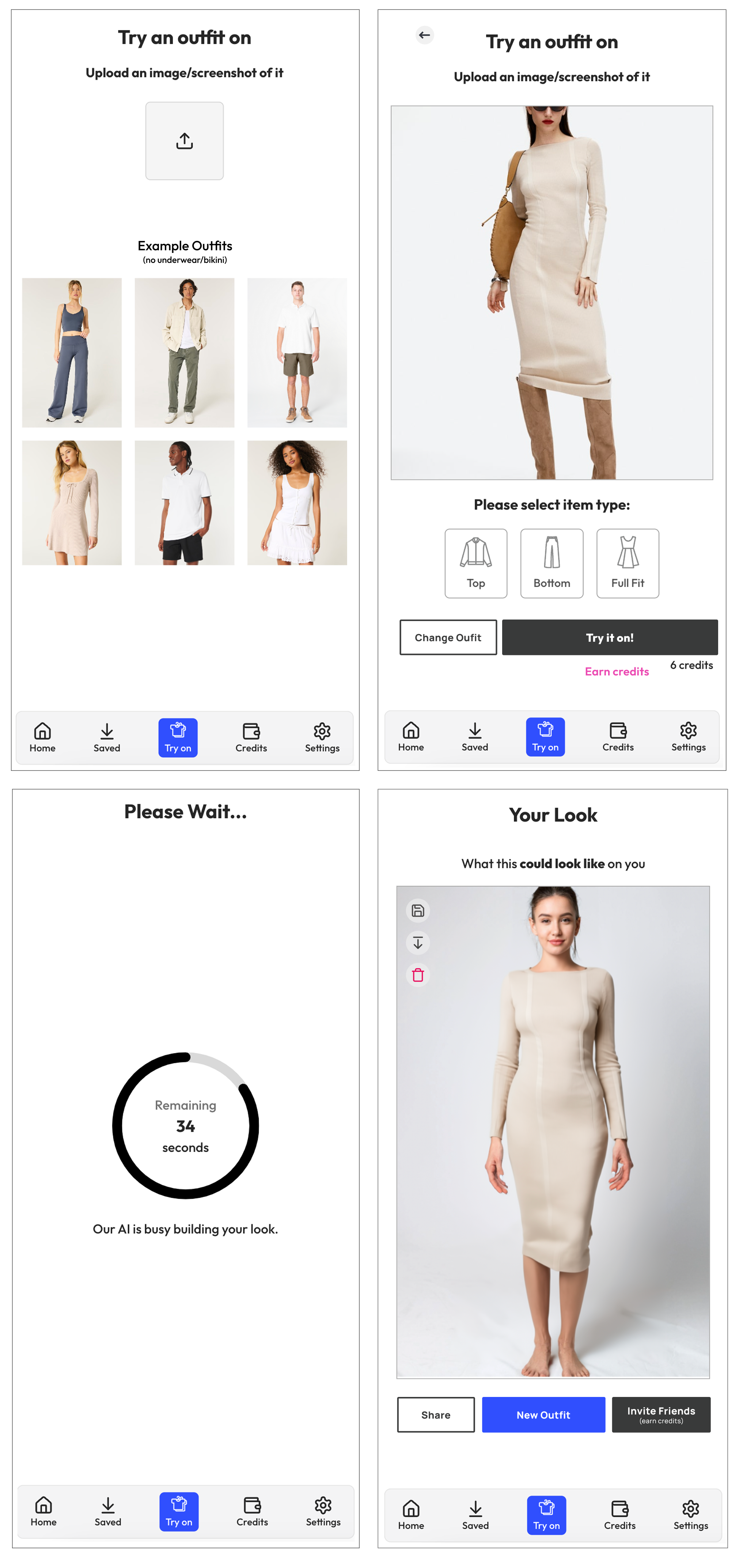

c - Removed URL pasting for simplicity and added instructions for garment images so the gen AI can be more accurate

d - A preview of the outfit is shown so the user can change it if they selected the wrong image

e - The user enters the garment type so more accurate results can be achieved

f - Final output screen

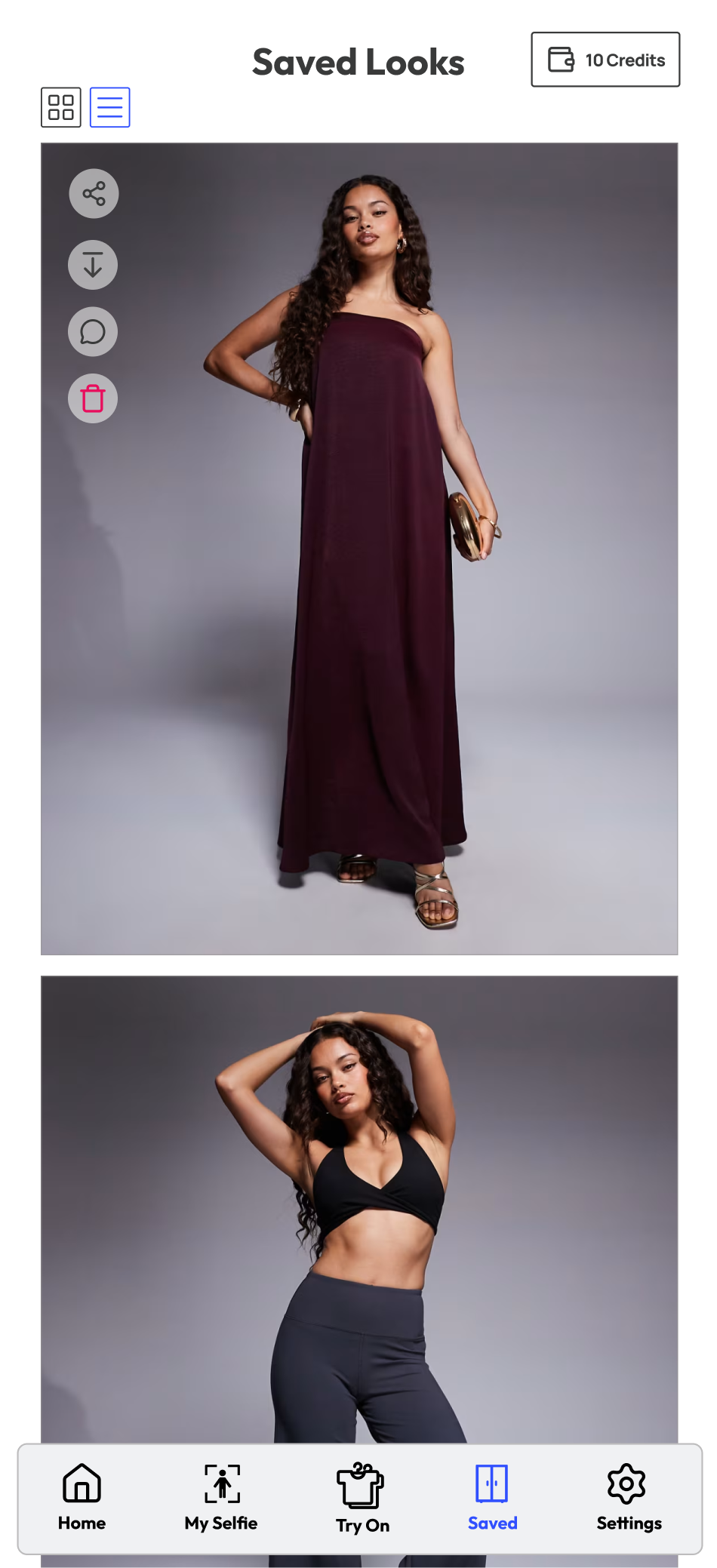

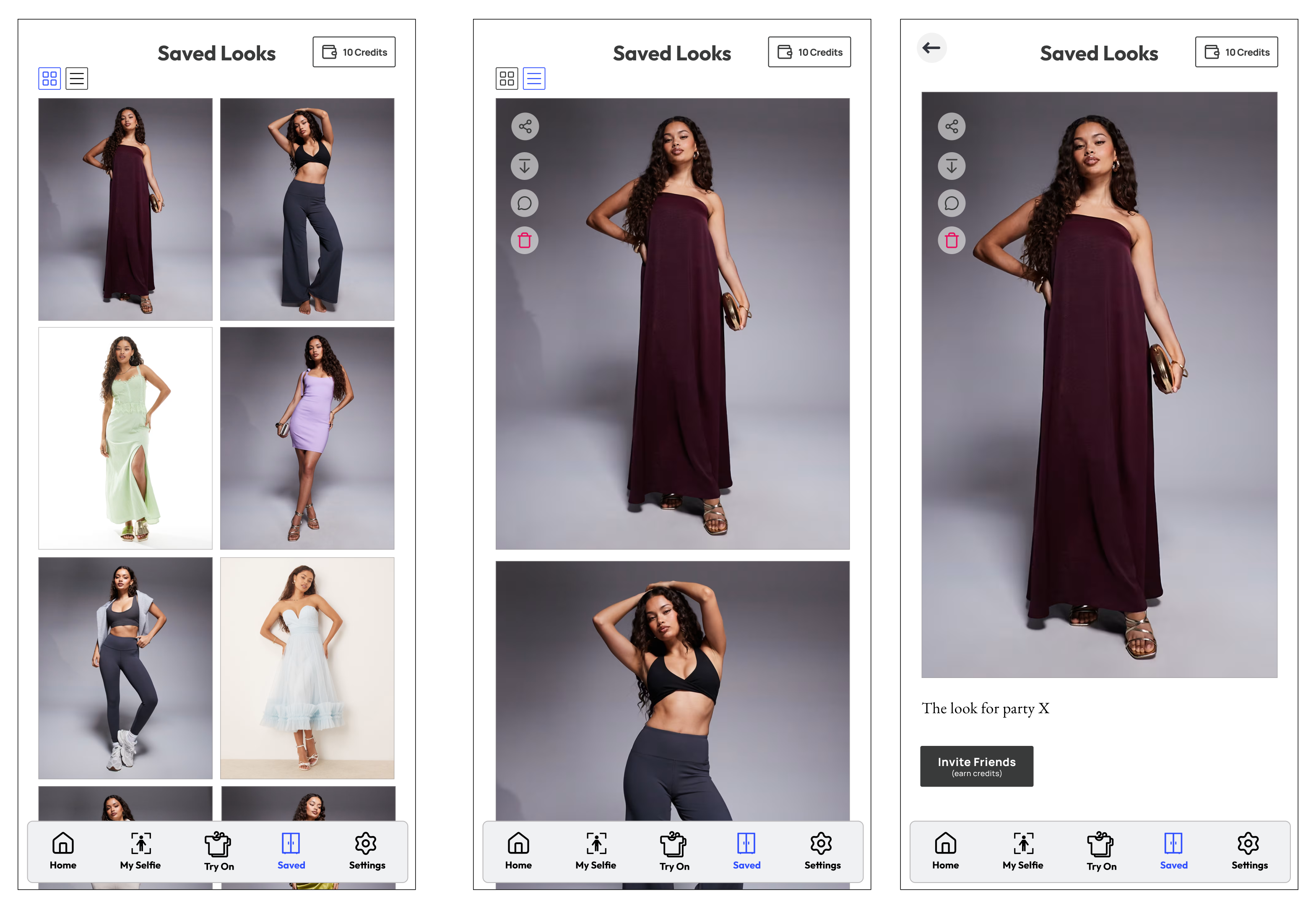

Goal: People can review their saved clothing when making buying decisions, write notes, and share items with others.

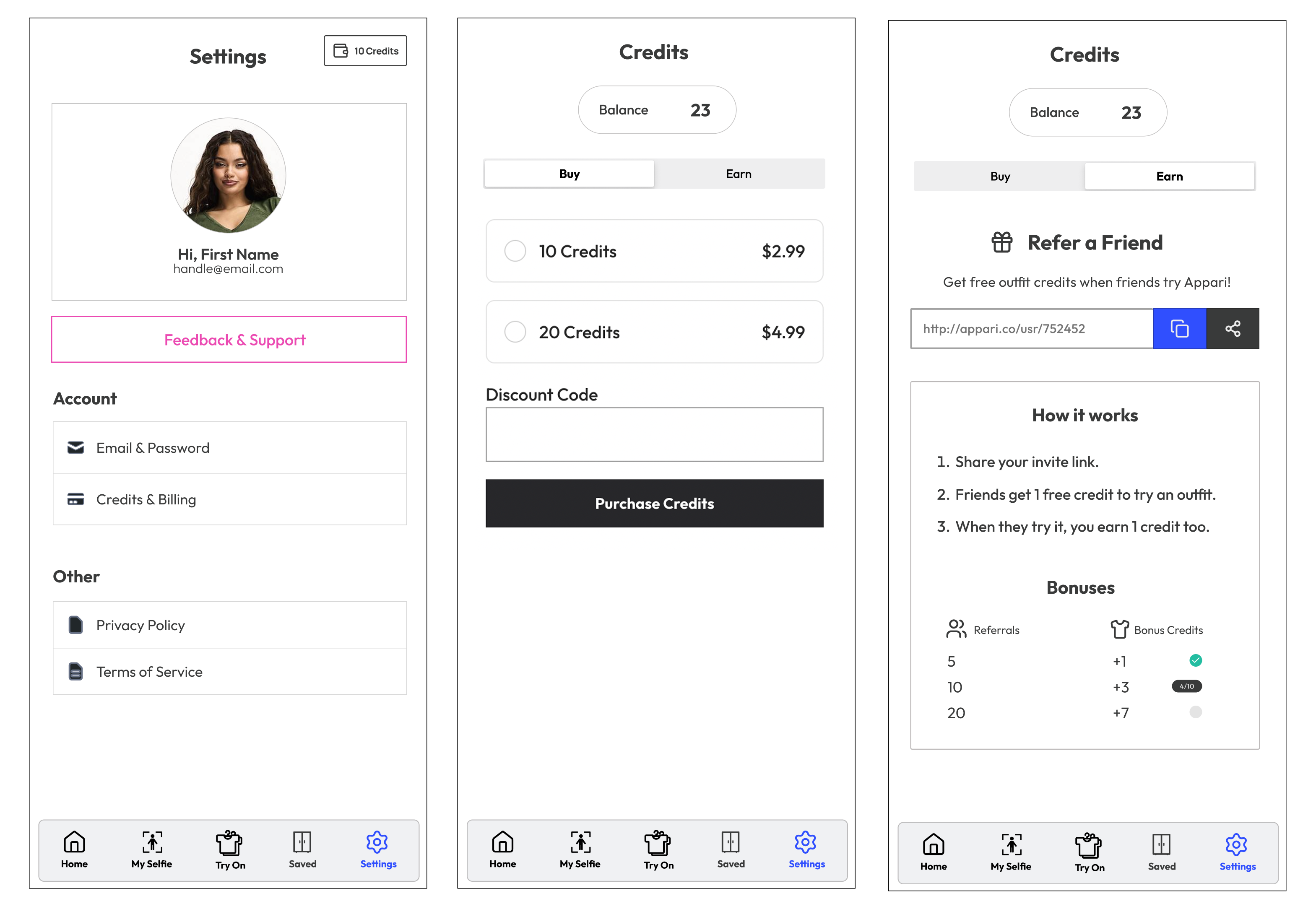

Goal: Users can complete basic account tasks, view their outfit credits, buy more credits, and refer friends.

Based on user feedback and research, the next design priorities include:

Long-term vision: Create an experience where people can visualise themselves in any outfit from any retailer, building confidence and reducing the friction of online fashion shopping.